Nieman Journalism Lab |

- Young-adult readers may have abandoned print, but they’ll take news in their pockets

- Nick Diakopoulos: Understanding bias in computational news media

- No sleep till: Technically Media’s next expansion stop is Brooklyn

| Young-adult readers may have abandoned print, but they’ll take news in their pockets Posted: 10 Dec 2012 09:01 PM PST Since the rise of the Internet, print media — most notably newspapers — have faced a big problem with younger readers. But according to a new study released today by the Pew Research Center, when you look specifically at the devices they love — the smartphones in their pockets — young adults rival or even surpass their parents and grandparents as news consumers. According to the report from Pew’s Project for Excellence in Journalism, 37 percent of smartphone owners between the ages of 18 and 29 get news on their devices daily, along with 40 percent of smartphone owners aged 30 to 49. Those are slightly higher than the equivalent rates for 50-64 (31 percent) and 65-plus (25 percent). Among tablet owners, news consumption numbers were broadly similar across age groups, with 50- to 64-year-olds being the peak news consumers. There’s more good news for media companies hoping to reach younger readers: They are more likely to share the news they read on mobile devices and to engage with ads on smartphones and tablets. For ads in particular, readers 18-29 were twice as likely as people 30-49 to “at least sometimes” touch an ad on a tablet. Use of mobile devices has been on the rise for some time, as has the use of phones and tablets as the main method of going online. Pew’s new findings again reinforce the importance of mobile to the future of journalism, but they also point to new opportunities for media companies. The data comes from a survey of 9,513 adults, 4,638 of whom owned a mobile device, between June and August of this year. Pew found many positive signs when it comes to reading on mobile devices: Over a third of the survey respondents said they got news daily on tablet or smartphone. A quarter of 18- to 29-year-old tablet owners surveyed said they read ebooks on them daily, a higher share than in any other age group.
Almost one third of users under 50 said they “sometimes” read archived magazine articles on their tablet. Overall, Pew found young men were the most active news consumers on tablets and smartphones, reading in-depth articles, watching video, and checking back with the news multiple times during the day. One thing the report makes clear is that paying for news remains a tough sell for many readers. As more newspapers incorporate digital subscription plans, readers are facing a number of options for paying for news:

As for subscriptions, the survey reinforces some things we already know: Older people are more likely to pay for news. According to Pew, people over 50 were almost twice as likely as those under 50 to have a print-only subscription. The 50-plus crowd were also more likely to have a print/digital combo: 20 percent of those surveyed said they have bundled subscriptions, compared to only 12 percent of people under 50. A minor bright spot? People under 50 were more likely to go digital only — but only 9 percent said they had digital subscriptions, compared to 8 percent of those over 50.

A sign that may not bode well for the idea of young people’s habits changing in a pro-news direction:

There was an area of agreement across all age groups in the report on the reading experience and design of tablet apps. The survey found that both people over 40 and under 40 preferred a “print-like” experience rather than a more interactive layout. (Interesting data in the final week of The Daily’s existence.) Similar to previous surveys from Pew, readers said they preferred reading on mobile browsers to native apps. But there was good news for fans of native apps as well.

Finally, as in other surveys, Pew found that iPad owners engaged with their devices at a significantly higher rate than owners of Android tablets did. Those with iPads were more likely to use their devices multiple times per day (54 percent to 33 percent for Android) and to use them to consume news daily (48 percent vs. 35 percent). Image from Steve Rhodes used under a Creative Commons license. |

| Nick Diakopoulos: Understanding bias in computational news media Posted: 10 Dec 2012 09:00 AM PST

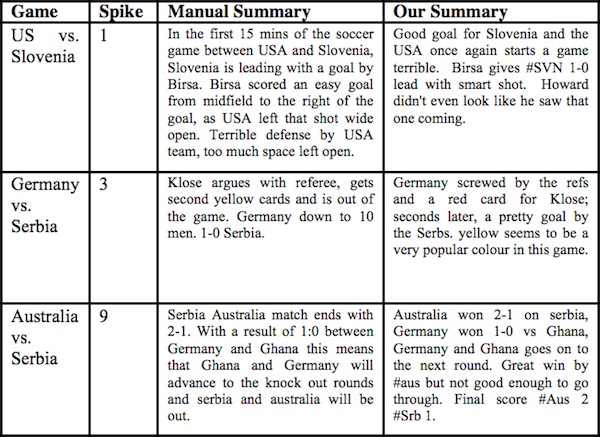

The Google News algorithm lists its criteria for ranking and grouping news articles as frequency of appearance, source, freshness, location, relevance, and diversity. Millions of times a day, the Google News algorithm is making editorial decisions using these criteria. But in the systematic application of its decision criteria, the algorithm might be introducing bias that is not obvious given its programming. It can be easy to succumb to the fallacy that, because computer algorithms are systematic, they must somehow be more “objective.” But it is in fact such systematic biases that are the most insidious since they often go unnoticed and unquestioned. Even robots have biases. Any decision process, whether human or algorithm, about what to include, exclude, or emphasize — processes of which Google News has many — has the potential to introduce bias. What’s interesting in terms of algorithms though is that the decision criteria available to the algorithm may appear innocuous while at the same time resulting in output that is perceived as biased. For example, unless directly programmed to do so, the Google News algorithm won’t play favorites when picking representative articles for a cluster on a local political campaign — it’s essentially non-partisan. But one of its criteria for choosing articles is “frequency of appearance.” That may seem neutral — but if one of the candidates in that race consistently got slightly more media coverage (i.e. higher “frequency of appearance”), that criterion could make Google News’ output appear partisan. Algorithms may lack the semantics for understanding higher-order concepts like stereotypes or racism — but if, for instance, the simple and measurable criteria they use to exclude information from visibility somehow do correlate with race divides, they might appear to have a racial bias.
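
The frequency-of-appearance effect described above can be made concrete with a toy example. The sketch below is not Google News' actual ranking: the `pick_top_articles` function, the cluster data, and the three display slots are all hypothetical, chosen only to show how a slight skew in coverage can turn into a total skew in what gets displayed.

```python
from collections import Counter


def pick_top_articles(cluster, slots=3):
    """Fill the display slots using a neutral-looking rule (hypothetical)."""
    # "Frequency of appearance": how many articles in the cluster
    # share this article's subject.
    frequency = Counter(article["subject"] for article in cluster)
    # sorted() is stable, so articles with equal scores keep their order.
    ranked = sorted(cluster, key=lambda a: frequency[a["subject"]], reverse=True)
    return ranked[:slots]


def share(articles, subject):
    """Fraction of the given articles that are about the subject."""
    return sum(a["subject"] == subject for a in articles) / len(articles)


# Made-up cluster: Candidate A gets slightly more coverage (11 vs. 9 articles).
cluster = (
    [{"id": f"a{i}", "subject": "Candidate A"} for i in range(11)]
    + [{"id": f"b{i}", "subject": "Candidate B"} for i in range(9)]
)

shown = pick_top_articles(cluster)
share_in = share(cluster, "Candidate A")
share_out = share(shown, "Candidate A")
print(f"Candidate A: {share_in:.0%} of coverage, {share_out:.0%} of displayed articles")
```

Nothing in the rule mentions either candidate, yet with this made-up data every displayed article is about the candidate who had a small edge in coverage.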
Simple decision criteria that lead to complex inclusion and exclusion decisions are one way that bias, often unwittingly, can manifest in algorithms. Other mechanisms through which algorithms introduce bias into news media can be illustrated by considering the paramount information process of summarization.

Summarizing reality

In a sense, reporting is really about summarizing reality. You might protest: “It’s also about narrative and storytelling!” — and you’d be right, since few things are more boring than a dry summary. But before the story, the reporter has to first make decisions about which events to include, what context can safely be excluded, and what to emphasize as truly mattering — all of which have the potential to tint the story with bias. Reporters observe the world and uncover a range of information, only to later prune it down to some interesting yet manageable subset that fits the audience’s available time and attention. That’s summarization. Summarization is important because time and attention are two of the defining commodities of our age. Most of us don’t want or need the intricate details of every story; we’re often happy to instead have a concise overview of an event. This need to optimize attention and save us from the information glut is driving new innovations in how information gets processed and summarized, both in editorial processes as well as new computing algorithms. Circa is a startup in San Francisco working on an editorial process and mobile app which summarizes events into a series of “points” or factoids. They employ editors to collect “facts from a variety of sources” and convert them into “concise, easy-to-read ‘points’ in Circa,” as explained in their app’s help pages. To be fair, Circa thinks of themselves less as summarizers and more as storytellers who add value by stringing those concise “points” into a sequence that builds a story.
The approach they use is driven by editors and is, of course, subject to all of the ways in which bias can enter into an editorial process, including both individual and organizational predilections. But what if Circa started adding algorithms which, instead of relying on editors, made decisions automatically about which points to include or exclude? They might start looking a bit more like London-based startup Summly, which has a new reading app populated with “algorithmically generated summaries from hundreds of sources.” Summly works by choosing the most “important” sentences from an article and presenting those as the summary. But how might this algorithm start to introduce bias into the stories it outputs, for instance, through its definition of “important”? In a story about the Israeli-Palestinian conflict, say, is it possible their algorithm might disproportionately select sentences that serve to emphasize one side over the other? We may never know how Summly’s algorithms might give rise to bias in the summaries produced; it’s a proprietary and closed technology which underscores the need for transparency in algorithms. But we can learn a lot about how summarization algorithms work and might introduce bias by studying more open efforts, such as scholarly research. I spoke to Jeff Nichols, a manager and research staff member at IBM Research, who’s built a system for algorithmically summarizing sporting events based on just the tweets that people post about them. Nichols, a sports enthusiast, was really getting into the World Cup in 2010 when he started plotting the volume of tweets over time for the different games. He saw spikes in the volume and quickly started using his ad hoc method to help him find the most exciting parts of a game to fast forward to on his DVR. Volume spikes naturally occur around rousing events — most dramatically, goals. 
From there, Nichols and his team started asking deeper questions about what kinds of summaries they could actually produce from tweets. What they eventually built was a system that could process all of the tweets around a game, find the peaks in tweet activity, select key representative tweets during those peaks, and then splice together those tweets into short summaries. They found that the output of their algorithm was of similar quality to that of manually generated summaries (also based on tweets) when rated on dimensions of readability and grammaticality. The IBM system highlights a particular bias that can creep into algorithms though: Any bias in the data fed into the algorithm gets carried through to the output of the system. Nichols describes the bias as “whoever yells the loudest,” since their relatively simple algorithm finds salient tweets by looking at the frequency of key terms in English. The implications are fairly straightforward: If Slovenia scores on a controversial play against the U.S., the algorithm might output “The U.S. got robbed” if that’s the predominant response in the English tweets. But presumably that’s not what the Slovenians tweeting about the event think about the play. It’s probably something more like, “Great play — take that U.S.!” (In Slovenian of course). Nichols is interested in how they might adapt their algorithm to take advantage of different perspectives and generate purposefully biased summaries from different points of view. (Could be a hit with cable news!) In making decisions about what to include or exclude in a summary, algorithms usually have to go through a step which prioritizes information. Things with a lower priority get excluded. The IBM system, for example, is geared towards “spiky” events within sports. This generally works for finding the parts of a game that are most exciting and which receive a lot of attention. 
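
The pipeline described above (find peaks in tweet volume, then pick representative tweets by the frequency of their key terms) can be sketched roughly as follows. This is a minimal illustration, not the IBM system: the function names, the one-standard-deviation peak threshold, the summed term-frequency score, and the sample tweets are all assumptions made for the example.

```python
from collections import Counter
from statistics import mean, pstdev


def find_peaks(tweets, threshold_sigmas=1.0):
    """Return the minutes whose tweet volume is unusually high."""
    volume = Counter(t["minute"] for t in tweets)
    counts = list(volume.values())
    # Assumed rule: a peak is a minute above mean + k standard deviations.
    cutoff = mean(counts) + threshold_sigmas * pstdev(counts)
    return sorted(minute for minute, count in volume.items() if count > cutoff)


def representative_tweet(tweets_in_peak):
    """Pick the tweet whose words are most common within the peak."""
    term_freq = Counter(
        word for t in tweets_in_peak for word in t["text"].lower().split()
    )
    # Summing (rather than averaging) term frequencies favors longer tweets;
    # that is a choice of this sketch.
    return max(
        tweets_in_peak,
        key=lambda t: sum(term_freq[w] for w in t["text"].lower().split()),
    )


def summarize(tweets):
    """One representative tweet per detected peak, in time order."""
    return [
        representative_tweet([t for t in tweets if t["minute"] == minute])["text"]
        for minute in find_peaks(tweets)
    ]


# Made-up data: steady praise for a defender, then a burst after a disputed call.
tweets = [
    {"minute": m, "text": "solid defending by the sweeper again"}
    for m in range(1, 11)
]
burst = [
    "the us got robbed", "the us got robbed on that call", "robbed",
    "us got robbed again", "the us got robbed", "ref robbed the us",
    "odlična akcija slovenija", "bravo slovenija odlična akcija",
]
tweets += [{"minute": 86, "text": text} for text in burst]

print(summarize(tweets))  # ['the us got robbed on that call']
```

With this sample data the only detected peak is minute 86, and the summary carries the majority English reaction. The two Slovenian tweets are outvoted, and the steady one-tweet-per-minute praise for the sweeper never crosses the peak threshold at all.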
But there are other interesting stories simmering below the threshold of “spikiness.” What about the sweeper who played solid and consistent defense but never had a key play that garnered enough tweets to get detected by the algorithm? That part of the event, of the story, would be left out. The IBM algorithm not only prioritizes information but it also has to make selections based on different criteria. Some of these selections can also be encoded as heuristics, which the algorithm’s creators embed to help the algorithm make choices. For instance, the IBM system’s programmers set the algorithm to prefer longer rather than shorter tweets for the summary, since the shorter tweets tended to be less-readable sentence fragments. That’s certainly a defensible decision to make, but Nichols acknowledges it could also introduce a bias: “Not picking comments from people who tend not to write in complete sentences could perhaps exclude an undereducated portion of the population.” This highlights the issue that the criteria chosen by programmers for selection or prioritization may correlate with other variables (e.g. education level) that could be important from a media bias point of view.

Beyond summarization: optimization, ranking, aggregation

Summarization is just one type of information process that can be systematized in an algorithm. In your daily news diet, it’s likely that a variety of algorithms are touching the news before you even lay eyes on it. For example, personalization algorithms like those used by Zite, the popular news reading application, systematically bias content towards your interests, at the expense of exposing you to a wider variety of news. Social Flow is a startup in New York that uses optimization algorithms to determine the exact timing of when to share news and content on social networks so that it maximally resonates with the target audience. Optimization algorithms can also be used to determine the layout of a news page.
But optimizing layout based on one criterion, like number of pageviews, might have unintended consequences, like consistently placing scandals or celebrity news towards the top of the page. Again, the choice of what metrics to optimize, and what they might be correlated with, can have an impact. Another class of algorithms that is widespread in news information systems is ranking algorithms. Consider those “top news” lists on most news homepages, or how comments get ranked, or even how Twitter ranks trends. Twitter trends in particular have come under some scrutiny after events that people thought should appear, like #occupywallstreet or #wikileaks, didn’t trend. But, like Summly, Twitter is not transparent about the algorithm it uses to surface trends, making it hard to assess what the systematic biases in that algorithm really are and whether any heuristics or human choices embedded in the algorithm are also playing a role. Google also uses ranking algorithms to sort your search results. In this case, the ranking algorithm is susceptible to the same type of “whoever yells the loudest” bias we heard about from Nichols. The Internet is full of SEO firms trying to game Google’s algorithm so that certain content will appear high in search results even if, perhaps, it doesn’t deserve to be there. They do this in part by associating certain keywords with the target site and by creating links from many other sites to the target site. There are others who seek to manipulate search rankings. Takis Metaxas, a professor at Wellesley College, has written with colleague Eni Mustafaraj about “googlebombing,” the act of creating an association between a political actor, such as George W. Bush, and a negative search term, like “miserable failure,” so that the person shows up when that phrase is searched for. This is a perfect example of how biasing the data being fed into an algorithm can lead to a biased output.
And when the data feeding into an algorithm is public, the algorithm is left open to manipulation. Not all algorithmic bias has to be detrimental. In fact, if algorithms could somehow balance out the individual or cognitive biases that each of us harbors, this could positively impact our exposure to information. For instance, at the Korea Advanced Institute of Science and Technology (KAIST), Souneil Park and his collaborators have been experimenting with aggregation algorithms that feed into a news presentation called NewsCube, which nudges users towards consuming a greater variety of perspectives. Forget leaving things to chance with serendipity — their research is working on actively biasing your exposure to news in a beneficial way. Richard Thaler and Cass Sunstein, in their book Nudge, call this kind of influence “libertarian paternalism” — biasing experiences to correct for cognitive deficiencies in human reasoning. Not only can algorithms bias the content that we consume — someday they might do so in a way that makes us smarter and less prone to our own shortcomings in reasoning. Perhaps an algorithm could even slowly push extremists towards the center by exposing them to increasingly moderate versions of their ideas. Algorithms are basically everywhere in our news environment, whether it’s summarization, personalization, optimization, ranking, association, classification, aggregation, or some other algorithmic information process. Their ubiquity makes it worth reflecting on how these processes can all serve to systematically manipulate the information we consume, whether that be through embedded heuristics, the data fed into them, or the criteria used to help them make inclusion, exclusion, and emphasizing decisions. Algorithms are always going to have to make non-random inclusion, exclusion, and emphasizing decisions about our media in order to help solve the problem of our limited time and attention.
We’re not going to magically make algorithms “objective” by understanding how they introduce bias into our media. But we can learn to be more literate and critical in reading computational media. Computational journalists in particular should get in the habit of thinking deeply about what the side effects of their algorithmic choices may be and what might be correlated with any criteria their algorithms use to make decisions. In turn, we should be transparent about those side effects in a way that helps the public judge the authority of our contributions. Nick Diakopoulos is a NYC-based consultant specializing in research, design, and development for computational media applications. His expertise spans human-computer interaction, data visualization, and social media analytics. You can find more of his research online at his website. |

| No sleep till: Technically Media’s next expansion stop is Brooklyn Posted: 10 Dec 2012 07:00 AM PST

NEW YORK — News organizations have long seen value in their ability to connect people: linking citizens to public officials, advertisers to readers, and so on. But in today’s nichified media world, media companies are finding it worthwhile to forge connections that are segmented in the same ways content now is. That can be as simple as having your political columnist host a political trivia night for readers — or it can be at the heart of your business model. For Technically Media, it’s the latter. The tech news startup gets only about a tenth of its revenue from traditional advertising; its money comes from being useful to the entrepreneurial communities in the cities it covers.

Bringing together tech-minded East Coasters is driving the tech news startup’s latest expansion plan. Technically Media launched as Technically Philly in Philadelphia four years ago and expanded to Baltimore over the summer. Now, cofounder Brian James Kirk says they’ll launch Technically Brooklyn in the first half of 2013 — they’re looking for founding sponsors now — then Technically Boston and Technically D.C. shortly thereafter. (Oh, hey, they already have the related Twitter accounts.) As the brand grows, it’s morphing. “Editorial coverage is still going to be a vital part of what we do, and I think it’s kind of a differentiator for us,” Kirk said. “But I can’t say for sure how the team will come together as we approach new markets. Content is what drives interest. We’ve always considered ourselves journalists. We’ve always been interested in hyperlocal coverage and being connected to the community.” The community, as Kirk sees it, doesn’t just mean the tech scene in Philly or the tech scene in Baltimore. Instead, he envisions a tech corridor that runs from Washington, D.C., all the way up to Boston. (Kind of like Amtrak’s Northeast Regional, only with better wifi.) Technically Media hopes providing a multi-city tech connection is what will set it apart — especially as it’s trying to make a name for itself in cities where the local-tech-coverage scene is significantly more crowded than Philly’s and Baltimore’s were. Kirk and his team bandied about the idea of focusing their expansion in underserved cities like Detroit and New Orleans. Places like New York have no shortage of tech writers. In Boston, the field already includes BostInno, Xconomy, The Hive, and more. Still, Kirk says he doesn’t see Brooklyn as a next-level proving ground — the expansion to Baltimore was the true test, he insists — but rather a natural fit for a site that has heretofore focused on post-industrial cities with nascent tech communities. 
In other words, Brooklyn makes sense because it’s kind of the Philly of New York (with apologies to people from all of those cities, who surely cringe at the comparison). “Brooklyn really resonates to us in the way that it is a smaller part of a bigger community in New York,” Kirk said. “It has 2.5 million people. That reminds me a lot of Philadelphia. And there’s still a divide between affluent folks and less privileged folks, so there’s still a lot of issues to be covered in terms of digital access, how they’re approaching policy, how they’re trying to get tech companies to come, changing their infrastructure to support that — in many ways it reminded us of Philadelphia.” As cities along the East Coast grapple with the kinds of issues Technically Media covers, why not bring together leaders of those individual communities? What Kirk calls “the information exchange” across communities will become potentially more valuable than the city-specific “tech week” events that have been at the center of Technically Media’s business model so far. “How many similar conversations are happening?” Kirk said. “For example, in partnership with a Baltimore organization, we brought 20 to 30 technologists from Baltimore up to Philly to tour [and discuss] digital divide issues. We brought the CIO of the city from Baltimore to meet with the CTO in Philadelphia. We organize the entire day, we toured the Baltimore folks through Philadelphia, and then we wrote about it in both markets. “We just think there’s a real need to create a mentality that the Northeast Corridor is very much connected and very much can be sharing resources. So you create that shared mentality that it’s not just Philly, it’s not just New York, it’s not just Boston, it’s not just D.C. It’s all these places working together.” Photo of the Brooklyn Bridge by Sue Waters used under a Creative Commons license. |
